Application: Analyzing recursion

Counting can sometimes be used to analyze the running time of recursive algorithms. For instance, look at the following recursion. Let be some positive integer.

and . Intuitively, the program, when given an input of size , calls two subroutines on smaller inputs (of sizes and respectively). Do you know how to prove a reasonable upper bound on the running time of this program?

For sure, we know that (we can use the fact that and then this becomes which solves to at most ). Can we do a better analysis?

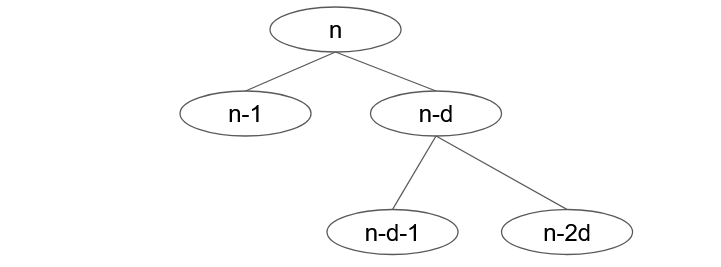

If we represent an execution of this algorithm as a recursion tree, it would look like Figure 13, where we would be interested in counting the number of leaves of this tree. In the tree, each node is labelled by the size of the instance and when a node with label is making recursive calls to sub-instances, this node would have two children of labels and respectively (as its left and right children).

To illustrate the idea of counting (in a somewhat sloppy but intuitive way), we can view each leaf as a sequence , denoting the left or right turns that are taken from root towards it. Notice that . Moreover, the letter can appear in the sequence at most times (do you see why?). This implies that we have at most leaves. We will see later that this number is at most .

Turn the previous sloppy proof into a real, formal proof 😊